abcpy package¶

This reference gives details about the API of modules, classes and functions included in ABCpy.

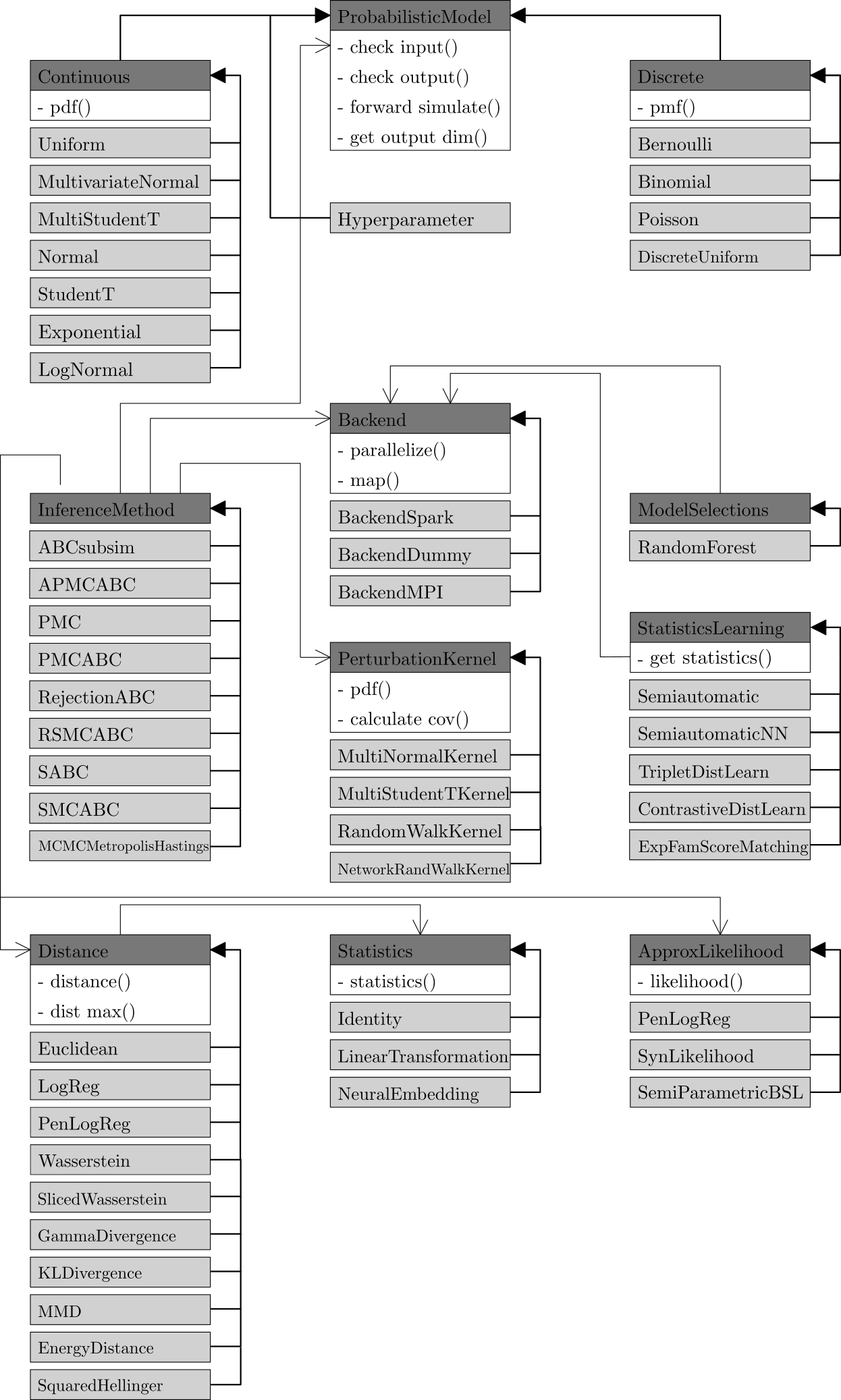

The following diagram shows selected classes with their most important

methods. Abstract classes, which cannot be instantiated, are highlighted in

dark gray and derived classes are highlighted in light gray. Inheritance is

shown by filled arrows. Arrows with no filling highlight associations, e.g.,

Distance is associated with Statistics

because it calls a method of the instantiated class to translate the input data to summary statistics.

abcpy.acceptedparametersmanager module¶

-

class

abcpy.acceptedparametersmanager.AcceptedParametersManager(model)[source]¶ Bases:

object-

__init__(model)[source]¶ This class manages the accepted parameters and other bds objects.

Parameters: model (list) – List of all root probabilistic models

-

broadcast(backend, observations)[source]¶ Broadcasts the observations to observations_bds using the specified backend.

Parameters: - backend (abcpy.backends object) – The backend used by the inference algorithm

- observations (list) – A list containing all observed data

-

update_kernel_values(backend, kernel_parameters)[source]¶ Broadcasts new parameters for each kernel

Parameters: - backend (abcpy.backends object) – The backend used by the inference algorithm

- kernel_parameters (list) – A list, in which each entry contains the values of the parameters associated with the corresponding kernel in the joint perturbation kernel

-

update_broadcast(backend, accepted_parameters=None, accepted_weights=None, accepted_cov_mats=None)[source]¶ Updates the broadcasted values using the specified backend

Parameters: - backend (abcpy.backend object) – The backend to be used for broadcasting

- accepted_parameters (list) – The accepted parameters to be broadcasted

- accepted_weights (list) – The accepted weights to be broadcasted

- accepted_cov_mats (np.ndarray) – The accepted covariance matrix to be broadcasted

-

get_mapping(models, is_root=True, index=0)[source]¶ Returns the order in which the models are discovered during recursive depth-first search. Commonly used when returning the accepted_parameters_bds for certain models.

Parameters: - models (list) – List of the root probabilistic models of the graph.

- is_root (boolean) – Specifies whether the current list of models is the list of overall root models

- index (integer) – The current index in depth-first search.

Returns: The first entry corresponds to the mapping of the root model, as well as all its parents. The second entry corresponds to the next index in depth-first search.

Return type: list

-

get_accepted_parameters_bds_values(models)[source]¶ Returns the accepted bds values for the specified models.

Parameters: models (list) – Contains the probabilistic models for which the accepted bds values should be returned Returns: The accepted_parameters_bds values of all the probabilistic models specified in models. Return type: list

-

abcpy.approx_lhd module¶

-

class

abcpy.approx_lhd.Approx_likelihood(statistics_calc)[source]¶ Bases:

objectThis abstract base class defines the approximate likelihood function.

-

__init__(statistics_calc)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

loglikelihood(y_obs, y_sim)[source]¶ To be overwritten by any sub-class: should compute the approximate loglikelihood value given the observed data set y_obs and the data set y_sim simulated from model set at the parameter value.

Parameters: - y_obs (Python list) – Observed data set.

- y_sim (Python list) – Simulated data set from model at the parameter value.

Returns: Computed approximate loglikelihood.

Return type: float

-

likelihood(y_obs, y_sim)[source]¶ Computes the likelihood by taking the exponential of the loglikelihood method.

Parameters: - y_obs (Python list) – Observed data set.

- y_sim (Python list) – Simulated data set from model at the parameter value.

Returns: Computed approximate likelihood.

Return type: float

-

-

class

abcpy.approx_lhd.SynLikelihood(statistics_calc)[source]¶ Bases:

abcpy.approx_lhd.Approx_likelihood-

__init__(statistics_calc)[source]¶ This class implements the approximate likelihood function which computes the approximate likelihood using the synthetic likelihood approach described in Wood [1]. For synthetic likelihood approximation, we compute the robust precision matrix using Ledoit and Wolf’s [2] method.

[1] S. N. Wood. Statistical inference for noisy nonlinear ecological dynamic systems. Nature, 466(7310):1102–1104, Aug. 2010.

[2] O. Ledoit and M. Wolf, A Well-Conditioned Estimator for Large-Dimensional Covariance Matrices, Journal of Multivariate Analysis, Volume 88, Issue 2, pages 365-411, February 2004.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

-

class

abcpy.approx_lhd.SemiParametricSynLikelihood(statistics_calc, bw_method_marginals='silverman')[source]¶ Bases:

abcpy.approx_lhd.Approx_likelihood-

__init__(statistics_calc, bw_method_marginals='silverman')[source]¶ This class implements the approximate likelihood function which computes the approximate likelihood using the semiparametric Synthetic Likelihood (semiBSL) approach described in [1]. Specifically, this represents the likelihood as a product of univariate marginals and the copula components (exploiting Sklar’s theorem). The marginals are approximated from simulations using a Gaussian KDE, while the copula is assumed to be a Gaussian copula, whose parameters are estimated from data as well.

This does not yet include shrinkage strategies for the correlation matrix.

[1] An, Z., Nott, D. J., & Drovandi, C. (2020). Robust Bayesian synthetic likelihood via a semi-parametric approach. Statistics and Computing, 30(3), 543-557.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- bw_method_marginals (str, scalar or callable, optional) – The method used to calculate the estimator bandwidth, passed to scipy.stats.gaussian_kde. Following the docs of that method, this can be ‘scott’, ‘silverman’, a scalar constant or a callable. If a scalar, this will be used directly as kde.factor. If a callable, it should take a gaussian_kde instance as only parameter and return a scalar. If None (default), ‘silverman’ is used. See the Notes in scipy.stats.gaussian_kde for more details.

-

loglikelihood(y_obs, y_sim)[source]¶ Computes the loglikelihood. This implementation aims to be equivalent to the BSL R package, but the results are slightly different due to small differences in the way the KDE is performed

Parameters: - y_obs (Python list) – Observed data set.

- y_sim (Python list) – Simulated data set from model at the parameter value.

Returns: Computed approximate loglikelihood.

Return type: float

-

-

class

abcpy.approx_lhd.PenLogReg(statistics_calc, model, n_simulate, n_folds=10, max_iter=100000, seed=None)[source]¶ Bases:

abcpy.approx_lhd.Approx_likelihood,abcpy.graphtools.GraphTools-

__init__(statistics_calc, model, n_simulate, n_folds=10, max_iter=100000, seed=None)[source]¶ This class implements the approximate likelihood function which computes the approximate likelihood up to a constant using penalized logistic regression described in Dutta et. al. [1]. It takes one additional function handler defining the true model and two additional parameters n_folds and n_simulate correspondingly defining number of folds used to estimate prediction error using cross-validation and the number of simulated dataset sampled from each parameter to approximate the likelihood function. For lasso penalized logistic regression we use glmnet of Friedman et. al. [2].

[1] Thomas, O., Dutta, R., Corander, J., Kaski, S., & Gutmann, M. U. (2020). Likelihood-free inference by ratio estimation. Bayesian Analysis.

[2] Friedman, J., Hastie, T., and Tibshirani, R. (2010). Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software, 33(1), 1–22.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- model (abcpy.models.Model) – Model object that conforms to the Model class.

- n_simulate (int) – Number of data points to simulate for the reference data set; this has to be the same as n_samples_per_param when calling the sampler. The reference data set is generated by drawing parameters from the prior and samples from the model when PenLogReg is instantiated.

- n_folds (int, optional) – Number of folds for cross-validation. The default value is 10.

- max_iter (int, optional) – Maximum passes over the data. The default is 100000.

- seed (int, optional) – Seed for the random number generator. The used glmnet solver is not deterministic, this seed is used for determining the cv folds. The default value is None.

-

abcpy.backends module¶

-

class

abcpy.backends.base.Backend[source]¶ Bases:

objectThis is the base class for every parallelization backend. It essentially resembles the map/reduce API from Spark.

An idea for the future is to implement a MPI version of the backend with the hope to be more complient with standard HPC infrastructure and a potential speed-up.

-

parallelize(list)[source]¶ This method distributes the list on the available workers and returns a reference object.

The list should be split into number of workers many parts. Each part should then be sent to a separate worker node.

Parameters: list (Python list) – the list that should get distributed on the worker nodes Returns: A reference object that represents the parallelized list Return type: PDS class (parallel data set)

-

broadcast(object)[source]¶ Send object to all worker nodes without splitting it up.

Parameters: object (Python object) – An abitrary object that should be available on all workers Returns: A reference to the broadcasted object Return type: BDS class (broadcast data set)

-

map(func, pds)[source]¶ A distributed implementation of map that works on parallel data sets (PDS).

On every element of pds the function func is called.

Parameters: - func (Python func) – A function that can be applied to every element of the pds

- pds (PDS class) – A parallel data set to which func should be applied

Returns: a new parallel data set that contains the result of the map

Return type: PDS class

-

-

class

abcpy.backends.base.PDS[source]¶ Bases:

objectThe reference class for parallel data sets (PDS).

-

class

abcpy.backends.base.BDS[source]¶ Bases:

objectThe reference class for broadcast data set (BDS).

-

class

abcpy.backends.base.BackendDummy[source]¶ Bases:

abcpy.backends.base.BackendThis is a dummy parallelization backend, meaning it doesn’t parallelize anything. It is mainly implemented for testing purpose.

-

parallelize(python_list)[source]¶ This actually does nothing: it just wraps the Python list into dummy pds (PDSDummy).

Parameters: python_list (Python list) – Returns: Return type: PDSDummy (parallel data set)

-

broadcast(object)[source]¶ This actually does nothing: it just wraps the object into BDSDummy.

Parameters: object (Python object) – Returns: Return type: BDSDummy class

-

map(func, pds)[source]¶ This is a wrapper for the Python internal map function.

Parameters: - func (Python func) – A function that can be applied to every element of the pds

- pds (PDSDummy class) – A pseudo-parallel data set to which func should be applied

Returns: a new pseudo-parallel data set that contains the result of the map

Return type: PDSDummy class

-

-

class

abcpy.backends.base.PDSDummy(python_list)[source]¶ Bases:

abcpy.backends.base.PDSThis is a wrapper for a Python list to fake parallelization.

-

class

abcpy.backends.base.BDSDummy(object)[source]¶ Bases:

abcpy.backends.base.BDSThis is a wrapper for a Python object to fake parallelization.

-

class

abcpy.backends.spark.BackendSpark(sparkContext, parallelism=4)[source]¶ Bases:

abcpy.backends.base.BackendA parallelization backend for Apache Spark. It is essetially a wrapper for the required Spark functionality.

-

__init__(sparkContext, parallelism=4)[source]¶ Initialize the backend with an existing and configured SparkContext.

Parameters: - sparkContext (pyspark.SparkContext) – an existing and fully configured PySpark context

- parallelism (int) – defines on how many workers a distributed dataset can be distributed

-

parallelize(python_list)[source]¶ This is a wrapper of pyspark.SparkContext.parallelize().

Parameters: list (Python list) – list that is distributed on the workers Returns: A reference object that represents the parallelized list Return type: PDSSpark class (parallel data set)

-

broadcast(object)[source]¶ This is a wrapper for pyspark.SparkContext.broadcast().

Parameters: object (Python object) – An abitrary object that should be available on all workers Returns: A reference to the broadcasted object Return type: BDSSpark class (broadcast data set)

-

map(func, pds)[source]¶ This is a wrapper for pyspark.rdd.map()

Parameters: - func (Python func) – A function that can be applied to every element of the pds

- pds (PDSSpark class) – A parallel data set to which func should be applied

Returns: a new parallel data set that contains the result of the map

Return type: PDSSpark class

-

-

class

abcpy.backends.spark.PDSSpark(rdd)[source]¶ Bases:

abcpy.backends.base.PDSThis is a wrapper for Apache Spark RDDs.

-

class

abcpy.backends.spark.BDSSpark(bcv)[source]¶ Bases:

abcpy.backends.base.BDSThis is a wrapper for Apache Spark Broadcast variables.

abcpy.continuousmodels module¶

-

class

abcpy.continuousmodels.Uniform(parameters, name='Uniform')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='Uniform')[source]¶ This class implements a probabilistic model following an uniform distribution.

Parameters: - parameters (list) – Contains two lists. The first list specifies the probabilistic models and hyperparameters from which the lower bound of the uniform distribution derive. The second list specifies the probabilistic models and hyperparameters from which the upper bound derives.

- name (string, optional) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064215897104'>, mpi_comm=None)[source]¶ Samples from a uniform distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

-

class

abcpy.continuousmodels.Normal(parameters, name='Normal')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='Normal')[source]¶ This class implements a probabilistic model following a normal distribution with mean mu and variance sigma.

Parameters: - parameters (list) – Contains the probabilistic models and hyperparameters from which the model derives. The list has two entries: from the first entry mean of the distribution and from the second entry variance is derived. Note that the second value of the list is strictly greater than 0.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064215472144'>, mpi_comm=None)[source]¶ Samples from a normal distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters of the from [mu, sigma]

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

-

class

abcpy.continuousmodels.StudentT(parameters, name='StudentT')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='StudentT')[source]¶ This class implements a probabilistic model following the Student’s T-distribution.

Parameters: - parameters (list) – Contains the probabilistic models and hyperparameters from which the model derives. The list has two entries: from the first entry mean of the distribution and from the second entry degrees of freedom is derived. Note that the second value of the list is strictly greater than 0.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064215109968'>, mpi_comm=None)[source]¶ Samples from a Student’s T-distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

-

class

abcpy.continuousmodels.MultivariateNormal(parameters, name='Multivariate Normal')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='Multivariate Normal')[source]¶ This class implements a probabilistic model following a multivariate normal distribution with mean and covariance matrix.

Parameters: - parameters (list of at length 2) – Contains the probabilistic models and hyperparameters from which the model derives. The first entry defines the mean, while the second entry defines the Covariance matrix. Note that if the mean is n dimensional, the covariance matrix is required to be of dimension nxn, symmetric and positive-definite.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064215214672'>, mpi_comm=None)[source]¶ Samples from a multivariate normal distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

-

class

abcpy.continuousmodels.MultiStudentT(parameters, name='MultiStudentT')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='MultiStudentT')[source]¶ This class implements a probabilistic model following the multivariate Student-T distribution.

Parameters: - parameters (list) – All but the last two entries contain the probabilistic models and hyperparameters from which the model derives. The second to last entry contains the covariance matrix. If the mean is of dimension n, the covariance matrix is required to be nxn dimensional. The last entry contains the degrees of freedom.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064218781392'>, mpi_comm=None)[source]¶ Samples from a multivariate Student’s T-distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

-

class

abcpy.continuousmodels.LogNormal(parameters, name='LogNormal')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='LogNormal')[source]¶ This class implements a probabilistic model following a Lognormal distribution with mean mu and variance sigma.

Parameters: - parameters (list) – Contains the probabilistic models and hyperparameters from which the model derives. The list has two entries: from the first entry mean of the underlying normal distribution and from the second entry variance of the underlying normal distribution is derived. Note that the second value of the list is strictly greater than 0.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064215272848'>, mpi_comm=None)[source]¶ Samples from a normal distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters of the from [mu, sigma]

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

-

class

abcpy.continuousmodels.Exponential(parameters, name='Exponential')[source]¶ Bases:

abcpy.probabilisticmodels.ProbabilisticModel,abcpy.probabilisticmodels.Continuous-

__init__(parameters, name='Exponential')[source]¶ This class implements a probabilistic model following a normal distribution with mean mu and variance sigma.

Parameters: - parameters (list) – Contains the probabilistic models and hyperparameters from which the model derives. The list has one entry: the rate \(\lambda\) of the exponential distribution, that has therefore pdf: \(f(x; \lambda) = \lambda \exp(-\lambda x )\)

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064215316432'>, mpi_comm=None)[source]¶ Samples from a normal distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pdf(input_values, x)[source]¶ Calculates the probability density function at point x. Commonly used to determine whether perturbed parameters are still valid according to the pdf.

Parameters: - input_values (list) – List of input parameters of the from [rate]

- x (list) – The point at which the pdf should be evaluated.

Returns: The evaluated pdf at point x.

Return type: Float

-

abcpy.discretemodels module¶

-

class

abcpy.discretemodels.Bernoulli(parameters, name='Bernoulli')[source]¶ Bases:

abcpy.probabilisticmodels.Discrete,abcpy.probabilisticmodels.ProbabilisticModel-

__init__(parameters, name='Bernoulli')[source]¶ This class implements a probabilistic model following a bernoulli distribution.

Parameters: - parameters (list) – A list containing one entry, the probability of the distribution.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064212776272'>, mpi_comm=None)[source]¶ Samples from the bernoulli distribution associtated with the probabilistic model.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples to be drawn.

- rng (random number generator) – The random number generator to be used.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pmf(input_values, x)[source]¶ Evaluates the probability mass function at point x.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- x (float) – The point at which the pmf should be evaluated.

Returns: The pmf evaluated at point x.

Return type: float

-

-

class

abcpy.discretemodels.Binomial(parameters, name='Binomial')[source]¶ Bases:

abcpy.probabilisticmodels.Discrete,abcpy.probabilisticmodels.ProbabilisticModel-

__init__(parameters, name='Binomial')[source]¶ This class implements a probabilistic model following a binomial distribution.

Parameters: - parameters (list) – Contains the probabilistic models and hyperparameters from which the model derives. Note that the first entry of the list, n, an integer and has to be larger than or equal to 0, while the second entry, p, has to be in the interval [0,1].

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064212611792'>, mpi_comm=None)[source]¶ Samples from a binomial distribution using the current values for each probabilistic model from which the model derives.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples that should be drawn.

- rng (Random number generator) – Defines the random number generator to be used. The default value uses a random seed to initialize the generator.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pmf(input_values, x)[source]¶ Calculates the probability mass function at point x.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- x (list) – The point at which the pmf should be evaluated.

Returns: The evaluated pmf at point x.

Return type: Float

-

-

class

abcpy.discretemodels.Poisson(parameters, name='Poisson')[source]¶ Bases:

abcpy.probabilisticmodels.Discrete,abcpy.probabilisticmodels.ProbabilisticModel-

__init__(parameters, name='Poisson')[source]¶ This class implements a probabilistic model following a poisson distribution.

Parameters: - parameters (list) – A list containing one entry, the mean of the distribution.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064212634640'>, mpi_comm=None)[source]¶ Samples k values from the defined possion distribution.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples.

- rng (random number generator) – The random number generator to be used.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pmf(input_values, x)[source]¶ Calculates the probability mass function of the distribution at point x.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- x (integer) – The point at which the pmf should be evaluated.

Returns: The evaluated pmf at point x.

Return type: Float

-

-

class

abcpy.discretemodels.DiscreteUniform(parameters, name='DiscreteUniform')[source]¶ Bases:

abcpy.probabilisticmodels.Discrete,abcpy.probabilisticmodels.ProbabilisticModel-

__init__(parameters, name='DiscreteUniform')[source]¶ This class implements a probabilistic model following a Discrete Uniform distribution.

Parameters: - parameters (list) – A list containing two entries, the upper and lower bound of the range.

- name (string) – The name that should be given to the probabilistic model in the journal file.

-

forward_simulate(input_values, k, rng=<MagicMock name='mock.RandomState()' id='140064214748112'>)[source]¶ Samples from the Discrete Uniform distribution associated with the probabilistic model.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- k (integer) – The number of samples to be drawn.

- rng (random number generator) – The random number generator to be used.

Returns: list – A list containing the sampled values as np-array.

Return type: [np.ndarray]

-

get_output_dimension()[source]¶ Provides the output dimension of the current model.

This function is in particular important if the current model is used as an input for other models. In such a case it is assumed that the output is always a vector of int or float. The length of the vector is the dimension that should be returned here.

Returns: The dimension of the output vector of a single forward simulation. Return type: int

-

pmf(input_values, x)[source]¶ Evaluates the probability mass function at point x.

Parameters: - input_values (list) – List of input parameters, in the same order as specified in the InputConnector passed to the init function

- x (float) – The point at which the pmf should be evaluated.

Returns: The pmf evaluated at point x.

Return type: float

-

abcpy.distances module¶

-

class

abcpy.distances.Distance(statistics_calc)[source]¶ Bases:

objectThis abstract base class defines how the distance between the observed and simulated data should be implemented.

-

__init__(statistics_calc)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ To be overwritten by any sub-class: should calculate the distance between two sets of data d1 and d2 using their respective statistics.

Usually, calling the _calculate_summary_stat private method to obtain statistics from the datasets is handy; that also keeps track of the first provided dataset (which is the observation in ABCpy inference schemes) and avoids computing the statistics for that multiple times.

Notes

The data sets d1 and d2 are array-like structures that contain n1 and n2 data points each. An implementation of the distance function should work along the following steps:

1. Transform both input sets dX = [ dX1, dX2, …, dXn ] to sX = [sX1, sX2, …, sXn] using the statistics object. See _calculate_summary_stat method.

2. Calculate the mutual desired distance, here denoted by - between the statistics; for instance, dist = [s11 - s21, s12 - s22, …, s1n - s2n] (in some cases however you may want to compute all pairwise distances between statistics elements.

Important: any sub-class must not calculate the distance between data sets d1 and d2 directly. This is the reason why any sub-class must be initialized with a statistics object.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

dist_max()[source]¶ To be overwritten by sub-class: should return maximum possible value of the desired distance function.

Examples

If the desired distance maps to \(\mathbb{R}\), this method should return numpy.inf.

Returns: The maximal possible value of the desired distance function. Return type: numpy.float

-

-

class

abcpy.distances.Divergence(statistics_calc)[source]¶ Bases:

abcpy.distances.DistanceThis is an abstract class which subclasses Distance, and is used as a parent class for all divergence estimators; more specifically, it is used for all Distances which compare the empirical distribution of simulations and observations.

-

class

abcpy.distances.Euclidean(statistics_calc)[source]¶ Bases:

abcpy.distances.DistanceThis class implements the Euclidean distance between two vectors.

The maximum value of the distance is np.inf.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class. -

__init__(statistics_calc)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ Calculates the distance between two datasets, by computing Euclidean distance between each element of d1 and d2 and taking their average.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

-

class

abcpy.distances.PenLogReg(statistics_calc)[source]¶ Bases:

abcpy.distances.DivergenceThis class implements a distance measure based on the classification accuracy.

The classification accuracy is calculated between two dataset d1 and d2 using lasso penalized logistics regression and return it as a distance. The lasso penalized logistic regression is done using glmnet package of Friedman et. al. [2]. While computing the distance, the algorithm automatically chooses the most relevant summary statistics as explained in Gutmann et. al. [1]. The maximum value of the distance is 1.0.

[1] Gutmann, M. U., Dutta, R., Kaski, S., & Corander, J. (2018). Likelihood-free inference via classification. Statistics and Computing, 28(2), 411-425.

[2] Friedman, J., Hastie, T., and Tibshirani, R. (2010). Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software, 33(1), 1–22.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class. -

__init__(statistics_calc)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

-

class

abcpy.distances.LogReg(statistics_calc, seed=None)[source]¶ Bases:

abcpy.distances.DivergenceThis class implements a distance measure based on the classification accuracy [1]. The classification accuracy is calculated between two dataset d1 and d2 using logistics regression and return it as a distance. The maximum value of the distance is 1.0. The logistic regression may not converge when using one single sample in each dataset (as for instance by putting n_samples_per_param=1 in an inference routine).

[1] Gutmann, M. U., Dutta, R., Kaski, S., & Corander, J. (2018). Likelihood-free inference via classification. Statistics and Computing, 28(2), 411-425.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- seed (integer, optionl) – Seed used to initialize the Random Numbers Generator used to determine the (random) cross validation split in the Logistic Regression classifier.

-

__init__(statistics_calc, seed=None)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

class

abcpy.distances.Wasserstein(statistics_calc, num_iter_max=100000)[source]¶ Bases:

abcpy.distances.DivergenceThis class implements a distance measure based on the 2-Wasserstein distance, as used in [1]. This considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and computes the 2-Wasserstein distance between the empirical distributions those simulations/observations define.

[1] Bernton, E., Jacob, P.E., Gerber, M. and Robert, C.P. (2019), Approximate Bayesian computation with the Wasserstein distance. J. R. Stat. Soc. B, 81: 235-269. doi:10.1111/rssb.12312

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- num_iter_max (integer, optional) – The maximum number of iterations in the linear programming algorithm to estimate the Wasserstein distance. Default to 100000.

-

__init__(statistics_calc, num_iter_max=100000)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

class

abcpy.distances.SlicedWasserstein(statistics_calc, n_projections=50, rng=<MagicMock name='mock.RandomState()' id='140064212041424'>)[source]¶ Bases:

abcpy.distances.DivergenceThis class implements a distance measure based on the sliced 2-Wasserstein distance, as used in [1]. This considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and computes the sliced 2-Wasserstein distance between the empirical distributions those simulations/observations define. Specifically, the sliced Wasserstein distance is a cheaper version of the Wasserstein distance which consists of projecting the multivariate data on 1d directions and computing the 1d Wasserstein distance, which is computationally cheap. The resulting sliced Wasserstein distance is obtained by averaging over a given number of projections.

[1] Nadjahi, K., De Bortoli, V., Durmus, A., Badeau, R., & Şimşekli, U. (2020, May). Approximate bayesian computation with the sliced-wasserstein distance. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 5470-5474). IEEE.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- n_projections (int, optional) – Number of 1d projections used for estimating the sliced Wasserstein distance. Default value is 50.

- rng (np.random.RandomState, optional) – random number generators used to generate the projections. If not provided, a new one is instantiated.

-

__init__(statistics_calc, n_projections=50, rng=<MagicMock name='mock.RandomState()' id='140064212041424'>)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ Calculates the distance between two datasets.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

dist_max()[source]¶ Returns: The maximal possible value of the desired distance function. Return type: numpy.float

-

static

get_random_projections(n_projections, d, seed=None)[source]¶ Taken from

https://github.com/PythonOT/POT/blob/78b44af2434f494c8f9e4c8c91003fbc0e1d4415/ot/sliced.py

Author: Adrien Corenflos <adrien.corenflos@aalto.fi>

License: MIT License

Generates n_projections samples from the uniform on the unit sphere of dimension d-1: \(\mathcal{U}(\mathcal{S}^{d-1})\)

Parameters: - n_projections (int) – number of samples requested

- d (int) – dimension of the space

- seed (int or RandomState, optional) – Seed used for numpy random number generator

Returns: out – The uniform unit vectors on the sphere

Return type: ndarray, shape (n_projections, d)

Examples

>>> n_projections = 100 >>> d = 5 >>> projs = get_random_projections(n_projections, d) >>> np.allclose(np.sum(np.square(projs), 1), 1.) # doctest: +NORMALIZE_WHITESPACE True

-

sliced_wasserstein_distance(X_s, X_t, a=None, b=None, n_projections=50, seed=None, log=False)[source]¶ Taken from

https://github.com/PythonOT/POT/blob/78b44af2434f494c8f9e4c8c91003fbc0e1d4415/ot/sliced.py

Author: Adrien Corenflos <adrien.corenflos@aalto.fi>

License: MIT License

Computes a Monte-Carlo approximation of the 2-Sliced Wasserstein distance \(\mathcal{SWD}_2(\mu, \nu) = \underset{\theta \sim \mathcal{U}(\mathbb{S}^{d-1})}{\mathbb{E}}[\mathcal{W}_2^2(\theta_\# \mu, \theta_\# \nu)]^{\frac{1}{2}}\) where \(\theta_\# \mu\) stands for the pushforwars of the projection \(\mathbb{R}^d \ni X \mapsto \langle \theta, X \rangle\)

Parameters: - X_s (ndarray, shape (n_samples_a, dim)) – samples in the source domain

- X_t (ndarray, shape (n_samples_b, dim)) – samples in the target domain

- a (ndarray, shape (n_samples_a,), optional) – samples weights in the source domain

- b (ndarray, shape (n_samples_b,), optional) – samples weights in the target domain

- n_projections (int, optional) – Number of projections used for the Monte-Carlo approximation

- seed (int or RandomState or None, optional) – Seed used for numpy random number generator

- log (bool, optional) – if True, sliced_wasserstein_distance returns the projections used and their associated EMD.

Returns: - cost (float) – Sliced Wasserstein Cost

- log (dict, optional) – log dictionary return only if log==True in parameters

Examples

>>> n_samples_a = 20 >>> reg = 0.1 >>> X = np.random.normal(0., 1., (n_samples_a, 5)) >>> sliced_wasserstein_distance(X, X, seed=0) # doctest: +NORMALIZE_WHITESPACE 0.0

References

Bonneel, Nicolas, et al. “Sliced and radon wasserstein barycenters of measures.” Journal of Mathematical Imaging and Vision 51.1 (2015): 22-45

-

class

abcpy.distances.GammaDivergence(statistics_calc, k=1, gam=0.1)[source]¶ Bases:

abcpy.distances.DivergenceThis implements an empirical estimator of the gamma-divergence for ABC as suggested in [1]. In [1], the gamma-divergence was proposed as a divergence which is robust to outliers. The estimator is based on a nearest neighbor density estimate. Specifically, this considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and estimates the divergence between the empirical distributions those simulations/observations define.

[1] Fujisawa, M., Teshima, T., Sato, I., & Sugiyama, M. γ-ABC: Outlier-robust approximate Bayesian computation based on a robust divergence estimator. In A. Banerjee and K. Fukumizu (Eds.), Proceedings of 24th International Conference on Artificial Intelligence and Statistics (AISTATS2021), Proceedings of Machine Learning Research, vol.130, pp.1783-1791, online, Apr. 13-15, 2021.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- k (int, optional) – nearest neighbor number for the density estimate. Default value is 1

- gam (float, optional) – the gamma parameter in the definition of the divergence. Default value is 0.1

-

__init__(statistics_calc, k=1, gam=0.1)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ Calculates the distance between two datasets.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

dist_max()[source]¶ Returns: The maximal possible value of the desired distance function. Return type: numpy.float

-

static

skl_estimator_gamma_q(s1, s2, k=1, gam=0.1)[source]¶ Gamma-Divergence estimator using scikit-learn’s NearestNeighbours s1: (N_1,D) Sample drawn from distribution P s2: (N_2,D) Sample drawn from distribution Q k: Number of neighbours considered (default 1) return: estimated D(P|Q)

Adapted from code provided by Masahiro Fujisawa (University of Tokyo / RIKEN AIP)

-

class

abcpy.distances.KLDivergence(statistics_calc, k=1)[source]¶ Bases:

abcpy.distances.DivergenceThis implements an empirical estimator of the KL divergence for ABC as suggested in [1]. The estimator is based on a nearest neighbor density estimate. Specifically, this considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and estimates the divergence between the empirical distributions those simulations/observations define.

[1] Jiang, B. (2018, March). Approximate Bayesian computation with Kullback-Leibler divergence as data discrepancy. In International Conference on Artificial Intelligence and Statistics (pp. 1711-1721). PMLR.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- k (int, optional) – nearest neighbor number for the density estimate. Default value is 1

-

__init__(statistics_calc, k=1)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ Calculates the distance between two datasets.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

dist_max()[source]¶ Returns: The maximal possible value of the desired distance function. Return type: numpy.float

-

class

abcpy.distances.MMD(statistics_calc, kernel='gaussian', biased_estimator=False, **kernel_kwargs)[source]¶ Bases:

abcpy.distances.DivergenceThis implements an empirical estimator of the MMD for ABC as suggested in [1]. This class implements a gaussian kernel by default but allows specifying different kernel functions. Notice that the original version in [1] suggested an unbiased estimate, which however can return negative values. We also provide a biased but provably positive estimator following the remarks in [2]. Specifically, this considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and estimates the MMD between the empirical distributions those simulations/observations define.

[1] Park, M., Jitkrittum, W., & Sejdinovic, D. (2016, May). K2-ABC: Approximate Bayesian computation with kernel embeddings. In Artificial Intelligence and Statistics (pp. 398-407). PMLR. [2] Nguyen, H. D., Arbel, J., Lü, H., & Forbes, F. (2020). Approximate Bayesian computation via the energy statistic. IEEE Access, 8, 131683-131698.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- kernel (str or callable) – Can be a string denoting the kernel, or a function. If a string, only gaussian is implemented for now; in that case, you can also provide an additional keyword parameter ‘sigma’ which is used as the sigma in the kernel. Default is the gaussian kernel.

- biased_estimator (boolean, optional) – Whether to use the biased (but always positive) or unbiased estimator; by default, it uses the biased one.

- kernel_kwargs – Additional keyword arguments to be passed to the distance calculator.

-

__init__(statistics_calc, kernel='gaussian', biased_estimator=False, **kernel_kwargs)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ Calculates the distance between two datasets.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

class

abcpy.distances.EnergyDistance(statistics_calc, base_distance='Euclidean', biased_estimator=True, **base_distance_kwargs)[source]¶ Bases:

abcpy.distances.MMDThis implements an empirical estimator of the Energy Distance for ABC as suggested in [1]. This class uses the Euclidean distance by default as a base distance, but allows to pass different distances. Moreover, when the Euclidean distance is specified, it is possible to pass an additional keyword argument beta which denotes the power of the distance to consider. In [1], the authors suggest to use a biased but provably positive estimator; we also provide an unbiased estimate, which however can return negative values. Specifically, this considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and estimates the MMD between the empirical distributions those simulations/observations define.

[1] Nguyen, H. D., Arbel, J., Lü, H., & Forbes, F. (2020). Approximate Bayesian computation via the energy statistic. IEEE Access, 8, 131683-131698.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- base_distance (str or callable) – Can be a string denoting the kernel, or a function. If a string, only Euclidean distance is implemented for now; in that case, you can also provide an additional keyword parameter ‘beta’ which is the power of the distance to consider. By default, this uses the Euclidean distance.

- biased_estimator (boolean, optional) – Whether to use the biased (but always positive) or unbiased estimator; by default, it uses the biased one.

- base_distance_kwargs – Additional keyword arguments to be passed to the distance calculator.

-

__init__(statistics_calc, base_distance='Euclidean', biased_estimator=True, **base_distance_kwargs)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

class

abcpy.distances.SquaredHellingerDistance(statistics_calc, k=1)[source]¶ Bases:

abcpy.distances.DivergenceThis implements an empirical estimator of the squared Hellinger distance for ABC. Using the Hellinger distance was suggested originally in [1], but as that work did not provide originally any implementation details, this implementation is original. The estimator is based on a nearest neighbor density estimate. Specifically, this considers the several simulations/observations in the datasets as iid samples from the model for a fixed parameter value/from the data generating model, and estimates the divergence between the empirical distributions those simulations/observations define.

[1] Frazier, D. T. (2020). Robust and Efficient Approximate Bayesian Computation: A Minimum Distance Approach. arXiv preprint arXiv:2006.14126.

Parameters: - statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

- k (int, optional) – nearest neighbor number for the density estimate. Default value is 1

-

__init__(statistics_calc, k=1)[source]¶ The constructor of a sub-class must accept a non-optional statistics calculator as a parameter; then, it must call the __init__ method of the parent class. This ensures that the object is initialized correctly so that the _calculate_summary_stat private method can be called when computing the distances.

Parameters: statistics_calc (abcpy.statistics.Statistics) – Statistics extractor object that conforms to the Statistics class.

-

distance(d1, d2)[source]¶ Calculates the distance between two datasets.

Parameters: - d1 (Python list) – Contains n1 data points.

- d2 (Python list) – Contains n2 data points.

Returns: The distance between the two input data sets.

Return type: numpy.float

-

dist_max()[source]¶ Returns: The maximal possible value of the desired distance function. Return type: numpy.float

-

static

skl_estimator_squared_Hellinger_distance(s1, s2, k=1)[source]¶ Squared Hellinger distance estimator using scikit-learn’s NearestNeighbours s1: (N_1,D) Sample drawn from distribution P s2: (N_2,D) Sample drawn from distribution Q k: Number of neighbours considered (default 1) return: estimated D(P|Q)

abcpy.graphtools module¶

-

class

abcpy.graphtools.GraphTools[source]¶ Bases:

objectThis class implements all methods that will be called recursively on the graph structure.

-

sample_from_prior(model=None, rng=<MagicMock name='mock.RandomState()' id='140064218810576'>)[source]¶ Samples values for all random variables of the model. Commonly used to sample new parameter values on the whole graph.

Parameters: - model (abcpy.ProbabilisticModel object) – The root model for which sample_from_prior should be called.

- rng (Random number generator) – Defines the random number generator to be used

-

pdf_of_prior(models, parameters, mapping=None, is_root=True)[source]¶ Calculates the joint probability density function of the prior of the specified models at the given parameter values. Commonly used to check whether new parameters are valid given the prior, as well as to calculate acceptance probabilities.

Parameters: - models (list of abcpy.ProbabilisticModel objects) – Defines the models for which the pdf of their prior should be evaluated

- parameters (python list) – The parameters at which the pdf should be evaluated

- mapping (list of tuples) – Defines the mapping of probabilistic models and index in a parameter list.

- is_root (boolean) – A flag specifying whether the provided models are the root models. This is to ensure that the pdf is calculated correctly.

Returns: The resulting pdf,as well as the next index to be considered in the parameters list.

Return type: list

-

get_parameters(models=None, is_root=True)[source]¶ Returns the current values of all free parameters in the model. Commonly used before perturbing the parameters of the model.

Parameters: - models (list of abcpy.ProbabilisticModel objects) – The models for which, together with their parents, the parameter values should be returned. If no value is provided, the root models are assumed to be the model of the inference method.

- is_root (boolean) – Specifies whether the current models are at the root. This ensures that the values corresponding to simulated observations will not be returned.

Returns: A list containing all currently sampled values of the free parameters.

Return type: list

-

set_parameters(parameters, models=None, index=0, is_root=True)[source]¶ Sets new values for the currently used values of each random variable. Commonly used after perturbing the parameter values using a kernel.

Parameters: - parameters (list) – Defines the values to which the respective parameter values of the models should be set

- model (list of abcpy.ProbabilisticModel objects) – Defines all models for which, together with their parents, new values should be set. If no value is provided, the root models are assumed to be the model of the inference method.

- index (integer) – The current index to be considered in the parameters list

- is_root (boolean) – Defines whether the current models are at the root. This ensures that only values corresponding to random variables will be set.

Returns: list – Returns whether it was possible to set all parameters and the next index to be considered in the parameters list.

Return type: [boolean, integer]

-

get_correct_ordering(parameters_and_models, models=None, is_root=True)[source]¶ Orders the parameters returned by a kernel in the order required by the graph. Commonly used when perturbing the parameters.

Parameters: - parameters_and_models (list of tuples) – Contains tuples containing as the first entry the probabilistic model to be considered and as the second entry the parameter values associated with this model

- models (list) – Contains the root probabilistic models that make up the graph. If no value is provided, the root models are assumed to be the model of the inference method.

Returns: The ordering which can be used by recursive functions on the graph.

Return type: list

-

simulate(n_samples_per_param, rng=<MagicMock name='mock.RandomState()' id='140064217918416'>, npc=None)[source]¶ Simulates data of each model using the currently sampled or perturbed parameters.

Parameters: rng (random number generator) – The random number generator to be used. Returns: Each entry corresponds to the simulated data of one model. Return type: list

-

abcpy.inferences module¶

abcpy.modelselections module¶

-

class

abcpy.modelselections.ModelSelections(model_array, statistics_calc, backend, seed=None)[source]¶ Bases:

objectThis abstract base class defines a model selection rule of how to choose a model from a set of models given an observation.

-

__init__(model_array, statistics_calc, backend, seed=None)[source]¶ Constructor that must be overwritten by the sub-class.

The constructor of a sub-class must accept an array of models to choose the model from, and two non-optional parameters statistics calculator and backend stored in self.statistics_calc and self.backend defining how to calculate sumarry statistics from data and what kind of parallelization to use.

Parameters: - model_array (list) – A list of models which are of type abcpy.probabilisticmodels

- statistics (abcpy.statistics.Statistics) – Statistics object that conforms to the Statistics class.

- backend (abcpy.backends.Backend) – Backend object that conforms to the Backend class.

- seed (integer, optional) – Optional initial seed for the random number generator. The default value is generated randomly.

-

select_model(observations, n_samples=1000, n_samples_per_param=100)[source]¶ To be overwritten by any sub-class: returns a model selected by the modelselection procedure most suitable to the obersved data set observations. Further two optional integer arguments n_samples and n_samples_per_param is supplied denoting the number of samples in the refernce table and the data points in each simulated data set.

Parameters: - observations (python list) – The observed data set.

- n_samples (integer, optional) – Number of samples to generate for reference table.

- n_samples_per_param (integer, optional) – Number of data points in each simulated data set.

Returns: A model which are of type abcpy.probabilisticmodels

Return type: abcpy.probabilisticmodels

-

posterior_probability(observations)[source]¶ To be overwritten by any sub-class: returns the approximate posterior probability of the chosen model given the observed data set observations.

Parameters: observations (python list) – The observed data set. Returns: A vector containing the approximate posterior probability of the model chosen. Return type: np.ndarray

-

-

class

abcpy.modelselections.RandomForest(model_array, statistics_calc, backend, N_tree=100, n_try_fraction=0.5, seed=None)[source]¶ Bases:

abcpy.modelselections.ModelSelections,abcpy.graphtools.GraphToolsThis class implements the model selection procedure based on the Random Forest ensemble learner as described in Pudlo et. al. [1].

[1] Pudlo, P., Marin, J.-M., Estoup, A., Cornuet, J.-M., Gautier, M. and Robert, C. (2016). Reliable ABC model choice via random forests. Bioinformatics, 32 859–866.

-

__init__(model_array, statistics_calc, backend, N_tree=100, n_try_fraction=0.5, seed=None)[source]¶ Parameters: - N_tree (integer, optional) – Number of trees in the random forest. The default value is 100.

- n_try_fraction (float, optional) – The fraction of number of summary statistics to be considered as the size of the number of covariates randomly sampled at each node by the randomised CART. The default value is 0.5.

-

select_model(observations, n_samples=1000, n_samples_per_param=1)[source]¶ Parameters: - observations (python list) – The observed data set.

- n_samples (integer, optional) – Number of samples to generate for reference table. The default value is 1000.

- n_samples_per_param (integer, optional) – Number of data points in each simulated data set. The default value is 1.

Returns: A model which are of type abcpy.probabilisticmodels

Return type: abcpy.probabilisticmodels

-

abcpy.NN_utilities module¶

Functions and classes needed for the neural network based summary statistics learning.

-

abcpy.NN_utilities.algorithms.contrastive_training(samples, similarity_set, embedding_net, cuda, batch_size=16, n_epochs=200, samples_val=None, similarity_set_val=None, early_stopping=False, epochs_early_stopping_interval=1, start_epoch_early_stopping=10, positive_weight=None, load_all_data_GPU=False, margin=1.0, lr=None, optimizer=None, scheduler=None, start_epoch_training=0, use_tqdm=True, optimizer_kwargs={}, scheduler_kwargs={}, loader_kwargs={})[source]¶ Implements the algorithm for the contrastive distance learning training of a neural network; need to be provided with a set of samples and the corresponding similarity matrix

-

abcpy.NN_utilities.algorithms.triplet_training(samples, similarity_set, embedding_net, cuda, batch_size=16, n_epochs=400, samples_val=None, similarity_set_val=None, early_stopping=False, epochs_early_stopping_interval=1, start_epoch_early_stopping=10, load_all_data_GPU=False, margin=1.0, lr=None, optimizer=None, scheduler=None, start_epoch_training=0, use_tqdm=True, optimizer_kwargs={}, scheduler_kwargs={}, loader_kwargs={})[source]¶ Implements the algorithm for the triplet distance learning training of a neural network; need to be provided with a set of samples and the corresponding similarity matrix

-

abcpy.NN_utilities.algorithms.FP_nn_training(samples, target, embedding_net, cuda, batch_size=1, n_epochs=50, samples_val=None, target_val=None, early_stopping=False, epochs_early_stopping_interval=1, start_epoch_early_stopping=10, load_all_data_GPU=False, lr=0.001, optimizer=None, scheduler=None, start_epoch_training=0, use_tqdm=True, optimizer_kwargs={}, scheduler_kwargs={}, loader_kwargs={})[source]¶ Implements the algorithm for the training of a neural network based on regressing the values of the parameters on the corresponding simulation outcomes; it is effectively a training with a mean squared error loss. Needs to be provided with a set of samples and the corresponding parameters that generated the samples. Note that in this case the network has to have same output size as the number of parameters, as the learned summary statistic will have the same dimension as the parameter.

-

class

abcpy.NN_utilities.datasets.Similarities(samples, similarity_matrix, device)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectA dataset class that considers a set of samples and pairwise similarities defined between them. Note that, for our application of computing distances, we are not interested in train/test split.

-

class

abcpy.NN_utilities.datasets.SiameseSimilarities(similarities_dataset, positive_weight=None)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectThis class defines a dataset returning pairs of similar and dissimilar samples. It has to be instantiated with a dataset of the class Similarities

-

class

abcpy.NN_utilities.datasets.TripletSimilarities(similarities_dataset)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectThis class defines a dataset returning triplets of anchor, positive and negative samples. It has to be instantiated with a dataset of the class Similarities.

-

class

abcpy.NN_utilities.datasets.ParameterSimulationPairs(simulations, parameters, device)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectA dataset class that consists of pairs of parameters-simulation pairs, in which the data contains the simulations, with shape (n_samples, n_features), and targets contains the ground truth of the parameters, with shape (n_samples, 2). Note that n_features could also have more than one dimension here.

-

class

abcpy.NN_utilities.losses.ContrastiveLoss(margin)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectContrastive loss Takes embeddings of two samples and a target label == 1 if samples are from the same class and label == 0 otherwise.

-

class

abcpy.NN_utilities.losses.TripletLoss(margin)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectTriplet loss Takes embeddings of an anchor sample, a positive sample and a negative sample.

-

abcpy.NN_utilities.losses.Fisher_divergence_loss(first_der_t, second_der_t, eta, lam=0)[source]¶ lam is the regularization parameter of the Kingma & LeCun (2010) regularization

-

abcpy.NN_utilities.losses.Fisher_divergence_loss_with_c_x(first_der_t, second_der_t, eta, lam=0)[source]¶

-

class

abcpy.NN_utilities.networks.SiameseNet(embedding_net)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectThis is used in the contrastive distance learning. It is a network wrapping a standard neural network and feeding two samples through it at once.

-

class

abcpy.NN_utilities.networks.TripletNet(embedding_net)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectThis is used in the triplet distance learning. It is a network wrapping a standard neural network and feeding three samples through it at once.

-

class

abcpy.NN_utilities.networks.ScalerAndNet(net, scaler)[source]¶ Bases:

sphinx.ext.autodoc.importer._MockObjectDefines a nn.Module class that wraps a scaler and a neural network, and applies the scaler before passing the data through the neural network.

-

class